What is ProstT5?

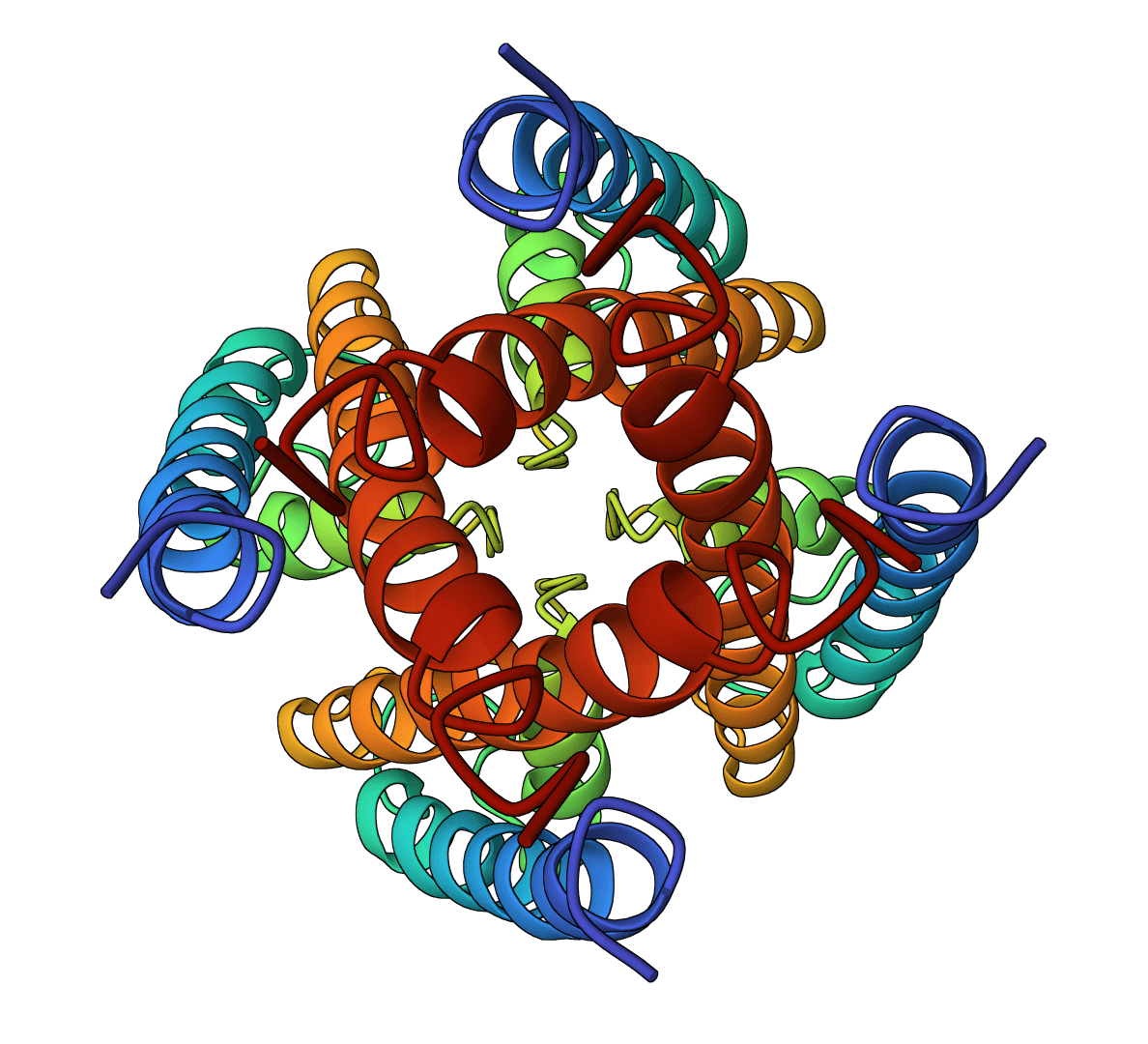

ProstT5 is a protein language model that translates bidirectionally between amino acid sequences and 3Di structural tokens. Developed by the Rostlab at TU Munich, the model encodes protein structure into a sequence-like representation, enabling structure-based analyses without computing explicit 3D coordinates.

The name reflects its architecture and purpose: Prost (Protein structure) + T5 (the transformer model it builds upon). ProstT5 extends ProtT5-XL-U50—a T5 model trained on billions of protein sequences—by fine-tuning on 17 million AlphaFold-predicted structures to learn the mapping between amino acids and 3Di tokens.

The 3Di structural alphabet

3Di is a structural alphabet introduced by FoldSeek that converts three-dimensional protein coordinates into a one-dimensional sequence of 20 letters. Each letter describes the local structural environment of a residue based on its interactions with neighboring residues in 3D space.

The 20-state alphabet was deliberately designed to mirror the 20 natural amino acids, allowing structure information to be processed using the same algorithms developed for sequence analysis. When working with ProstT5, amino acid sequences use uppercase letters (e.g., MVLSPADKTNVK) while 3Di tokens use lowercase (e.g., dddvvvpppqqs).

This conversion enables:

- Structure comparison using sequence alignment algorithms: Tools like MMseqs2 can compare 3Di strings thousands of times faster than traditional structural alignment methods

- Remote homology detection: Proteins with similar folds but low sequence identity can be identified through their 3Di representations

- Structure-informed language modeling: ProstT5 learns relationships between structure and sequence by treating both as "languages"
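As a toy illustration of the first point, the sketch below scores two 3Di strings with a plain Needleman-Wunsch global alignment, exactly as one would for amino acid sequences. The function name and the match, mismatch, and gap values are assumptions chosen for readability; FoldSeek uses its own 3Di substitution matrix and a much faster search strategy, so this only shows that ordinary sequence algorithms apply unchanged to 3Di strings.

```python
def global_alignment_score(a: str, b: str, match: int = 2,
                           mismatch: int = -1, gap: int = -2) -> int:
    """Needleman-Wunsch global alignment score with toy identity scoring.

    Works on any strings, so 3Di strings (lowercase) are handled the same
    way as amino acid sequences. The scoring values are illustrative only.
    """
    # prev[j] holds the best score for aligning a[:i-1] with b[:j]
    prev = [j * gap for j in range(len(b) + 1)]
    for i in range(1, len(a) + 1):
        curr = [i * gap]  # aligning a[:i] against an empty prefix of b
        for j in range(1, len(b) + 1):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            curr.append(max(diag, prev[j] + gap, curr[j - 1] + gap))
        prev = curr
    return prev[-1]


if __name__ == "__main__":
    # Two hypothetical 3Di strings that differ by one deleted state
    print(global_alignment_score("dddvvv", "ddvvv"))
```

A higher score means the two structural strings are more similar under this toy scheme; real searches should go through FoldSeek.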

How to use ProstT5 online

ProteinIQ hosts ProstT5 on GPU infrastructure, providing immediate access to sequence-structure translation without installing PyTorch or downloading model weights.

Input

| Format | Description |
|---|---|
| Protein sequence | FASTA format or raw amino acid sequence (uppercase letters) |
| 3Di tokens | Lowercase 3Di string for inverse folding mode |
| PDB ID | Fetch sequence directly from RCSB (e.g., 1UBQ) |

Up to 10 sequences can be processed in a single job.
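Before submitting a job, it can help to check the input locally. The following is a minimal sketch that accepts either FASTA text or a single raw sequence and enforces the 10-sequence limit stated above. The function name, the `seqN` fallback labels, and the error message are hypothetical; this is not ProteinIQ's own parser.

```python
from typing import List, Tuple

MAX_SEQUENCES = 10  # per-job limit stated in the text above


def parse_sequences(text: str) -> List[Tuple[str, str]]:
    """Parse FASTA text or a raw sequence into (name, sequence) pairs."""
    records: List[Tuple[str, str]] = []
    header = None
    chunks: List[str] = []

    def flush() -> None:
        # Store the record collected so far, if there is one
        if header is not None or chunks:
            name = header or f"seq{len(records) + 1}"
            records.append((name, "".join(chunks)))

    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            flush()
            header, chunks = line[1:].strip(), []
        else:
            chunks.append(line)
    flush()

    if len(records) > MAX_SEQUENCES:
        raise ValueError(f"{len(records)} sequences given, limit is {MAX_SEQUENCES}")
    return records


if __name__ == "__main__":
    print(parse_sequences(">example\nMVLSPADKTNVK\n"))
```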

Settings

| Setting | Description |
|---|---|
| Translation mode | Direction of translation. Sequence to 3Di predicts structural tokens from amino acids. 3Di to Sequence performs inverse folding. Extract embeddings outputs per-residue representations. |
| Use half precision (FP16) | Enables 16-bit floating point for faster inference. Disable for maximum numerical precision. |
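The precision trade-off behind the FP16 setting can be seen without a GPU. The sketch below round-trips a value through IEEE 754 half precision using the standard library's `struct` module (format code `e`). The helper name is hypothetical, and the example says nothing about how much FP16 changes ProstT5's actual outputs; it only shows the rounding that 16-bit storage introduces.

```python
import struct


def to_fp16_and_back(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


if __name__ == "__main__":
    value = 0.1
    rounded = to_fp16_and_back(value)
    # Half precision keeps roughly three significant decimal digits
    print(value, rounded, abs(value - rounded))
```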

Output

The output depends on the selected mode:

| Mode | Output |
|---|---|
| Sequence to 3Di | 3Di token string (lowercase letters) for each input sequence |
| 3Di to Sequence | Amino acid sequence(s) compatible with the input structure |
| Extract embeddings | 1024-dimensional vector per residue (downloadable as NPY) |

How ProstT5 works

ProstT5 uses a T5 encoder-decoder architecture initialized from ProtT5-XL-U50, which was pre-trained on protein sequences using span-based denoising. The model was then fine-tuned in two phases:

- Structural alphabet learning: The original ProtT5 denoising objective was applied to both amino acids and 3Di tokens, teaching the model the new structural vocabulary while preserving its understanding of sequence patterns

- Translation training: The model learned to translate between amino acid sequences and 3Di representations using 17 million high-quality AlphaFold predictions from the AlphaFold Database

During inference, directional prefixes guide the model:

- `<AA2fold>` for sequence-to-structure translation or amino acid embedding

- `<fold2AA>` for structure-to-sequence translation or 3Di embedding
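For readers running the model themselves, the prefix is chosen from the letter case of the input. The sketch below prepares a single input string under three assumptions taken from the publicly documented ProtT5-family usage and not confirmed by this page: residues are separated by spaces, the rare amino acids U, Z, O, and B are mapped to X, and the prefix is joined to the sequence with one space. The function name is hypothetical.

```python
import re


def prepare_prostt5_input(sequence: str) -> str:
    """Add the directional prefix and space-separate the residues.

    Uppercase input is treated as amino acids, lowercase input as 3Di.
    """
    seq = sequence.strip()
    if seq.isupper():
        # Assumption: rare or ambiguous residues are replaced by X
        seq = re.sub(r"[UZOB]", "X", seq)
        prefix = "<AA2fold>"
    elif seq.islower():
        prefix = "<fold2AA>"
    else:
        raise ValueError("expected all-uppercase amino acids or all-lowercase 3Di")
    return prefix + " " + " ".join(seq)


if __name__ == "__main__":
    print(prepare_prostt5_input("MVLSPADKTNVK"))
    print(prepare_prostt5_input("dddvvvpppqqs"))
```

The resulting strings are what would be passed to a tokenizer; loading the model and tokenizer is left out here to keep the example dependency-free.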

Applications

Remote homology detection

3Di strings predicted by ProstT5 can be used with FoldSeek to find structurally similar proteins. On the SCOPe40 benchmark, ProstT5-predicted 3Di tokens approach the performance of 3Di extracted from actual PDB structures while significantly outperforming sequence-only methods like MMseqs2.

The key advantage: ProstT5 generates 3Di representations in milliseconds, three orders of magnitude faster than computing 3D structures with AlphaFold and then extracting 3Di tokens.

Inverse folding

Given a 3Di structural representation, ProstT5 can generate amino acid sequences that would fold into that structure. This capability complements dedicated inverse folding tools like ProteinMPNN and ESM-IF1, though with different trade-offs—ProstT5 operates on the compressed 3Di representation rather than full atomic coordinates.

Transfer learning

The 1024-dimensional embeddings capture both sequence and structural information, making them useful features for downstream machine learning tasks such as:

- Function prediction

- Binding site identification

- Protein classification

- Variant effect prediction
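Many of these tasks need one fixed-length vector per protein, not one per residue. A common approach, shown here as an assumption and not as something ProstT5 prescribes, is to average the per-residue embeddings. The sketch uses plain Python lists so it runs without extra dependencies; with the downloaded NPY file one would typically do the same with an array mean over the residue axis.

```python
from typing import List, Sequence


def mean_pool(per_residue: Sequence[Sequence[float]]) -> List[float]:
    """Average per-residue vectors into a single per-protein vector."""
    if not per_residue:
        raise ValueError("no residue embeddings given")
    dim = len(per_residue[0])
    if any(len(vec) != dim for vec in per_residue):
        raise ValueError("all residue embeddings must have the same length")
    n = len(per_residue)
    return [sum(vec[i] for vec in per_residue) / n for i in range(dim)]


if __name__ == "__main__":
    # Three residues with toy 4-dimensional embeddings (real ones have 1024)
    toy = [[1.0, 0.0, 2.0, 4.0],
           [3.0, 0.0, 2.0, 0.0],
           [2.0, 3.0, 2.0, 2.0]]
    print(mean_pool(toy))
```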

Limitations

- 3Di is lossy: The structural alphabet captures local geometry but loses some fine-grained atomic detail. Predictions are less precise than full 3D structure prediction methods.

- Single-chain focus: ProstT5 was trained primarily on monomeric proteins. Multi-chain complexes and protein-ligand interactions are not explicitly modeled.

- No confidence scores: Unlike AlphaFold or ESMFold, ProstT5 does not output per-residue confidence metrics for its predictions.

- Sequence length limits: Very long proteins (>1000 residues) may require chunking or may exceed memory constraints.
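One possible way to chunk a long protein is a sliding window with overlap, sketched below. The window size of 1000 mirrors the threshold mentioned above, while the overlap of 100 residues and the function name are assumptions. How to merge predictions in the overlapping regions is left open, and residues near chunk boundaries lose context, so results may differ from a single full-length pass.

```python
from typing import List, Tuple


def chunk_sequence(seq: str, max_len: int = 1000,
                   overlap: int = 100) -> List[Tuple[int, str]]:
    """Split a sequence into overlapping windows as (start_index, chunk) pairs."""
    if max_len <= 0 or not 0 <= overlap < max_len:
        raise ValueError("require max_len > 0 and 0 <= overlap < max_len")
    if len(seq) <= max_len:
        return [(0, seq)]

    step = max_len - overlap
    chunks: List[Tuple[int, str]] = []
    start = 0
    while True:
        end = start + max_len
        chunks.append((start, seq[start:end]))
        if end >= len(seq):
            break  # the last window reached the end of the sequence
        start += step
    return chunks


if __name__ == "__main__":
    long_protein = "M" + "A" * 2499  # hypothetical 2500-residue sequence
    for start, chunk in chunk_sequence(long_protein):
        print(start, len(chunk))
```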

Related tools

- FoldSeek: Structure search using 3Di tokens—ProstT5's output can be used directly as input

- ESM-2: Protein language model for sequence embeddings (sequence-only, no structural information)

- ESMFold: Structure prediction from sequence (outputs 3D coordinates rather than 3Di tokens)

- ProteinMPNN: Inverse folding from PDB structures with higher accuracy but requiring full atomic coordinates

- ESM-IF1: Alternative inverse folding approach using geometric deep learning