What is ESM-2?

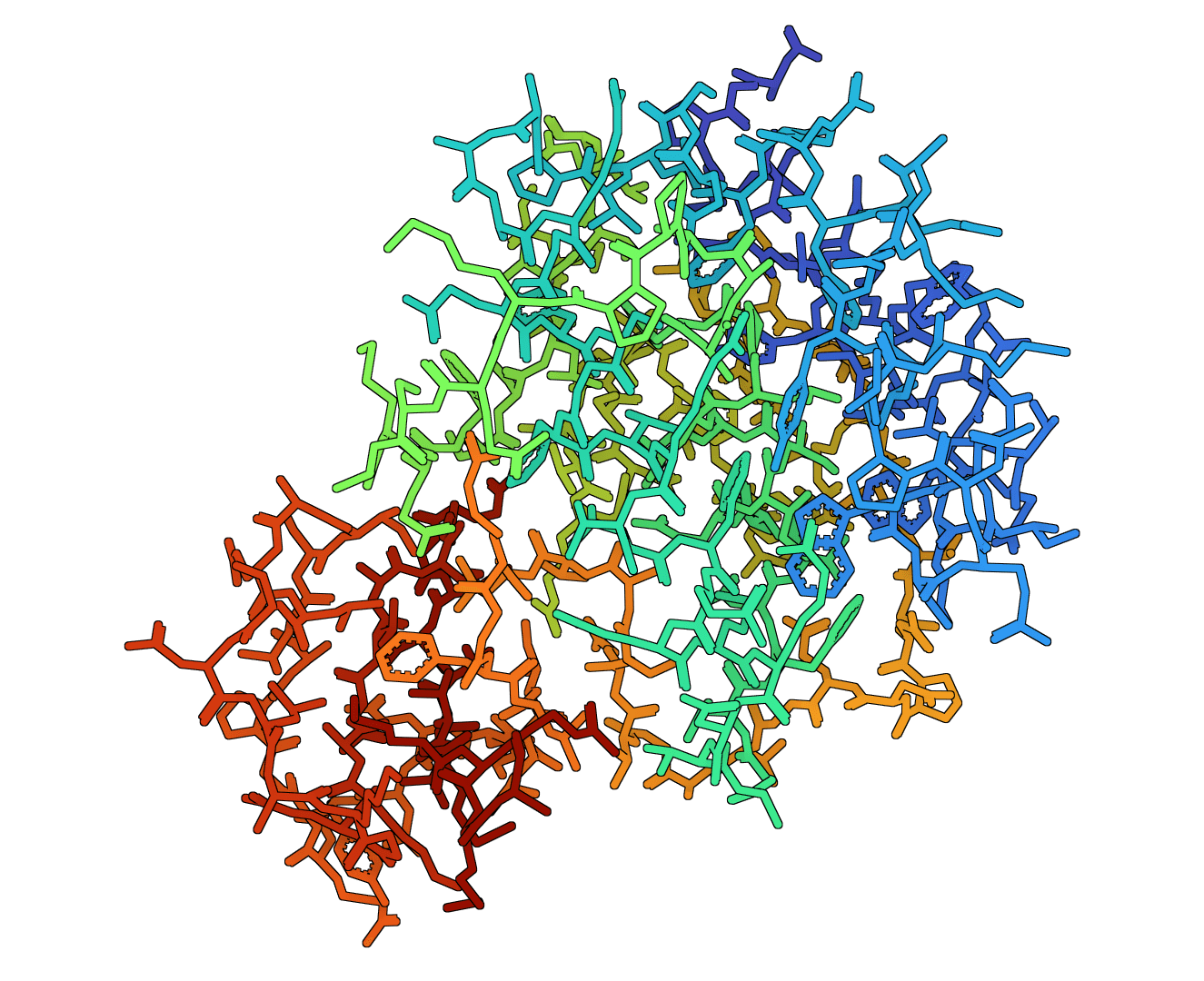

The ESM-2 model used here is the 650 million parameter protein language model from Meta AI's ESM-2 family. Trained on tens of millions of protein sequences drawn from UniRef clusters, it learns the patterns and grammar of protein sequences without any structural supervision.

The model generates 1280-dimensional embedding vectors for each amino acid in your sequence. These embeddings encode rich information about evolutionary relationships, structural context, and functional properties - all derived from sequence alone.

ESM-2 embeddings are widely used as input features for downstream machine learning tasks. Common applications include protein function prediction, variant effect prediction, protein-protein interaction prediction, and clustering proteins by similarity.

How does ESM-2 work?

ESM-2 uses a transformer architecture similar to large language models, but trained on protein sequences instead of text. The model processes sequences using self-attention, where each amino acid attends to all other positions to build context-aware representations.
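To make the attention step concrete, here is a minimal single-head sketch in NumPy. It is not the ESM-2 implementation: the projection matrices are random rather than learned, the sizes are toy values, and the function name `self_attention` is chosen only for illustration.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (L, d) array with one row per residue position.
    w_q, w_k, w_v: (d, d_head) projections (random here, learned in a real model).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])        # (L, L): each position scores every position
    scores -= scores.max(axis=-1, keepdims=True)   # stabilise the softmax numerically
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True) # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
L, d, d_head = 6, 16, 8                            # toy sizes, not ESM-2's
x = rng.normal(size=(L, d))
w_q, w_k, w_v = (rng.normal(size=(d, d_head)) for _ in range(3))
context, weights = self_attention(x, w_q, w_k, w_v)
```

Each row of `weights` says how strongly one position draws on every other position; `context` is the resulting context-aware representation.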

Masked language modeling

During training, random amino acids are masked and the model predicts their identity based on surrounding context. This forces the model to learn meaningful representations - proteins with similar functions or structures develop similar embedding patterns.
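The masking step can be sketched as below. The 15% mask fraction and the `<mask>` placeholder string are assumptions for illustration (the text above does not state them), and real training pipelines typically add further corruption rules that are left out here.

```python
import numpy as np

MASK = "<mask>"  # placeholder token, assumed for this sketch

def mask_sequence(sequence, mask_fraction=0.15, seed=0):
    """Hide a random subset of residues and return what the model must predict."""
    rng = np.random.default_rng(seed)
    n_mask = max(1, round(len(sequence) * mask_fraction))
    positions = sorted(rng.choice(len(sequence), size=n_mask, replace=False).tolist())
    tokens = list(sequence)
    targets = {}
    for pos in positions:
        targets[pos] = tokens[pos]   # true residue, used as the training label
        tokens[pos] = MASK           # what the model actually sees
    return tokens, targets

sequence = "MKTAYIAKQRQISFVKSHFSRQ"
tokens, targets = mask_sequence(sequence)
```

The model receives `tokens` and is scored on how well it recovers the residues stored in `targets`.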

Embedding extraction

When you run ESM-2, it produces a matrix of embeddings with shape (sequence_length, 1280). Each row represents one amino acid position. For sequence-level tasks, we provide mean-pooled embeddings that average across all positions into a single 1280-dimensional vector.
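As a small illustration of the pooling step, the sketch below averages a per-residue matrix into one vector. The matrix is random stand-in data with the documented shape, not real model output.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, dim = 120, 1280

# Stand-in for real per-residue embeddings: one row per amino acid position.
per_residue = rng.normal(size=(seq_len, dim)).astype(np.float32)

# Mean pooling: average over the position axis to get one vector per sequence.
mean_embedding = per_residue.mean(axis=0)
```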

Inputs & settings

Representation layer

Transformer models have multiple layers, each capturing different levels of abstraction. Layer 33 (the final layer) captures the most refined representations and works best for most tasks. Earlier layers may be useful for specific applications requiring less processed features.

Mean pooling

When enabled, we generate an additional file containing the mean of all per-residue embeddings. This single 1280-dimensional vector represents the entire sequence and is useful for sequence classification, clustering, or similarity search.

Understanding the results

ESM-2 outputs embeddings in NPY format, the standard NumPy array format used in Python machine learning workflows.

Per-residue embeddings

The primary output has shape (L, 1280) where L is your sequence length. Load it with numpy.load('embeddings.npy') and use directly as input features for downstream models.
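A minimal loading sketch follows. So that it runs on its own, it first writes a zero-filled stand-in file to a temporary directory; in practice you would point `numpy.load` at your downloaded file instead. The file name `embeddings.npy` follows the example above.

```python
import os
import tempfile
import numpy as np

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "embeddings.npy")
    # Stand-in for a real output file: 50 residues x 1280 dimensions.
    np.save(path, np.zeros((50, 1280), dtype=np.float32))
    embeddings = np.load(path)   # fully read into memory before the directory is removed

seq_len, dim = embeddings.shape  # rows are residue positions, columns are features
```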

Mean embeddings

The optional mean-pooled output has shape (1280,). Use these for comparing entire sequences - compute cosine similarity between mean embeddings to find functionally related proteins.
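Cosine similarity between two mean embeddings can be computed as in this sketch. The vectors are random stand-ins: `near` is a lightly perturbed copy of `query`, and `far` is unrelated.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

rng = np.random.default_rng(0)
query = rng.normal(size=1280)                # stand-in mean embedding
near = query + 0.1 * rng.normal(size=1280)   # slightly perturbed copy
far = rng.normal(size=1280)                  # unrelated vector

sim_near = cosine_similarity(query, near)
sim_far = cosine_similarity(query, far)
```

For a similarity search, compute this score between a query and every candidate and rank candidates from highest to lowest.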

Use cases

ESM-2 embeddings serve as powerful features for transfer learning. Pre-compute embeddings for your protein dataset, then train lightweight classifiers or regressors on top.
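One possible "lightweight regressor" is closed-form ridge regression, sketched here in NumPy. Everything in the example is synthetic: the features stand in for mean embeddings (32 dimensions instead of 1280 to keep it fast), the target is generated from a random linear rule, and `fit_ridge` is a name chosen for illustration. No intercept term is fitted.

```python
import numpy as np

def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression: w = (X^T X + alpha * I)^-1 X^T y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(d), X.T @ y)

rng = np.random.default_rng(0)
n_proteins, dim = 200, 32                  # toy sizes; real mean embeddings have 1280 dims
X = rng.normal(size=(n_proteins, dim))     # stand-in for precomputed mean embeddings
true_w = rng.normal(size=dim)
y = X @ true_w + 0.01 * rng.normal(size=n_proteins)  # synthetic property to predict

w = fit_ridge(X[:150], y[:150], alpha=1e-3)  # train on the first 150 proteins
pred = X[150:] @ w                           # predict the held-out 50
r = np.corrcoef(pred, y[150:])[0, 1]         # held-out correlation
```

Because the embeddings are computed once and reused, a model like this trains in a fraction of a second.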

Clustering proteins by embedding similarity often reveals functional groupings. Proteins with similar embeddings tend to share structural or functional properties, even without obvious sequence homology.

For variant effect prediction, compare embeddings of wild-type and mutant sequences. Large embedding differences often correlate with functional impact.
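A simple way to compare the two embeddings is a per-position Euclidean distance, sketched below. It assumes a substitution, so both matrices have the same shape. The data is a stand-in: only one row is perturbed here, whereas in real model output a single substitution usually shifts the embeddings at many positions.

```python
import numpy as np

def per_position_shift(wild_type, mutant):
    """Euclidean distance between wild-type and mutant embeddings at each position."""
    return np.linalg.norm(mutant - wild_type, axis=1)

rng = np.random.default_rng(0)
wild_type = rng.normal(size=(40, 1280))   # stand-in (L, 1280) embeddings
mutant = wild_type.copy()
mutant[17] += rng.normal(size=1280)       # pretend a substitution at index 17

shift = per_position_shift(wild_type, mutant)
most_affected = int(shift.argmax())       # position with the largest change
total_shift = float(shift.sum())          # one number summarising the variant
```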

Limitations

ESM-2 works with standard amino acids only. Non-standard residues and post-translational modifications are not directly represented.
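A quick pre-flight check can flag problem residues before you submit a sequence. This sketch assumes "standard" means the 20 canonical one-letter codes; the helper name `find_nonstandard` is hypothetical.

```python
# The 20 canonical amino acids, by one-letter code (assumed definition of "standard").
STANDARD = set("ACDEFGHIKLMNPQRSTVWY")

def find_nonstandard(sequence):
    """Return (index, residue) pairs for every character outside the standard set."""
    return [(i, aa) for i, aa in enumerate(sequence.upper()) if aa not in STANDARD]

clean = find_nonstandard("MKTAYIAK")
flagged = find_nonstandard("MKUAX")   # U (selenocysteine) and X (unknown) are flagged
```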

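Memory use grows quickly with sequence length, because self-attention compares every position with every other position. The model handles sequences up to 1024 tokens efficiently; longer sequences (more than roughly 1000 residues) need more memory and may require a chunking strategy.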
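One possible chunking strategy is a sliding window with overlap, sketched below. The window of 1000 residues and overlap of 100 are illustrative assumptions, not settings of this tool, and the sketch does not cover how to merge embeddings in the overlapping regions.

```python
def chunk_sequence(sequence, window=1000, overlap=100):
    """Split a sequence into overlapping windows, returned as (start_index, chunk) pairs."""
    if overlap >= window:
        raise ValueError("overlap must be smaller than window")
    step = window - overlap
    chunks = []
    start = 0
    while True:
        end = min(start + window, len(sequence))
        chunks.append((start, sequence[start:end]))
        if end == len(sequence):   # last window reached the end of the sequence
            break
        start += step
    return chunks

chunks = chunk_sequence("A" * 2500)
starts = [start for start, _ in chunks]
```

Keeping the start index with each chunk lets you map per-residue embeddings back to positions in the full sequence.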

Embeddings capture evolutionary patterns from natural proteins. Designed or synthetic proteins without natural homologs may have less informative representations.

Related tools

ESMFold uses ESM-2 embeddings internally to predict 3D protein structures directly from sequence. If you need structure prediction rather than embeddings, use ESMFold.

ESM-IF1 performs the inverse task - generating sequences from 3D structures. Use ESM-IF1 for structure-based protein design.

Based on: Lin et al. (2022) "Language models of protein sequences at the scale of evolution enable accurate structure prediction" bioRxiv 10.1101/2022.07.20.500902